You can write digital observation tools into School Improvement Grants (SIG) by classifying them as evidence-based interventions under ESSA Title I, Part A (Section 1003) or Title II.

These tools qualify when they address ‘comprehensive needs assessments’ by providing real-time instructional data to improve teacher efficacy and student outcomes.

The key is clearly demonstrating how these tools support evidence-based educational practices, align with federal and state priorities, and contribute to continuous school improvement for all students.

This guide shows how to write digital tools into your grant applications to secure essential funding.

Key Takeaways

- Digital observation tools qualify as allowable uses under Title I, Part A and Title II, Part A grants when directly tied to Comprehensive or Targeted Support and Improvement (CSI/TSI) plans.

- Your grant narrative must frame observation platforms as evidence-based, connected to student outcomes and implementation fidelity. Educational technology investments require a clear connection to learning, not just convenience.

- Budget justifications need to map every dollar to activities and stay within funding parameters, such as the 15% infrastructure cap under Title IV-A for FY2025–26.

- A clear logic model with SMART, time-bound objectives shows exactly how observation data will drive improvement, professional development, and measurable gains in student outcomes.

Which ESSA Funding Streams Support Digital Observation Tools?

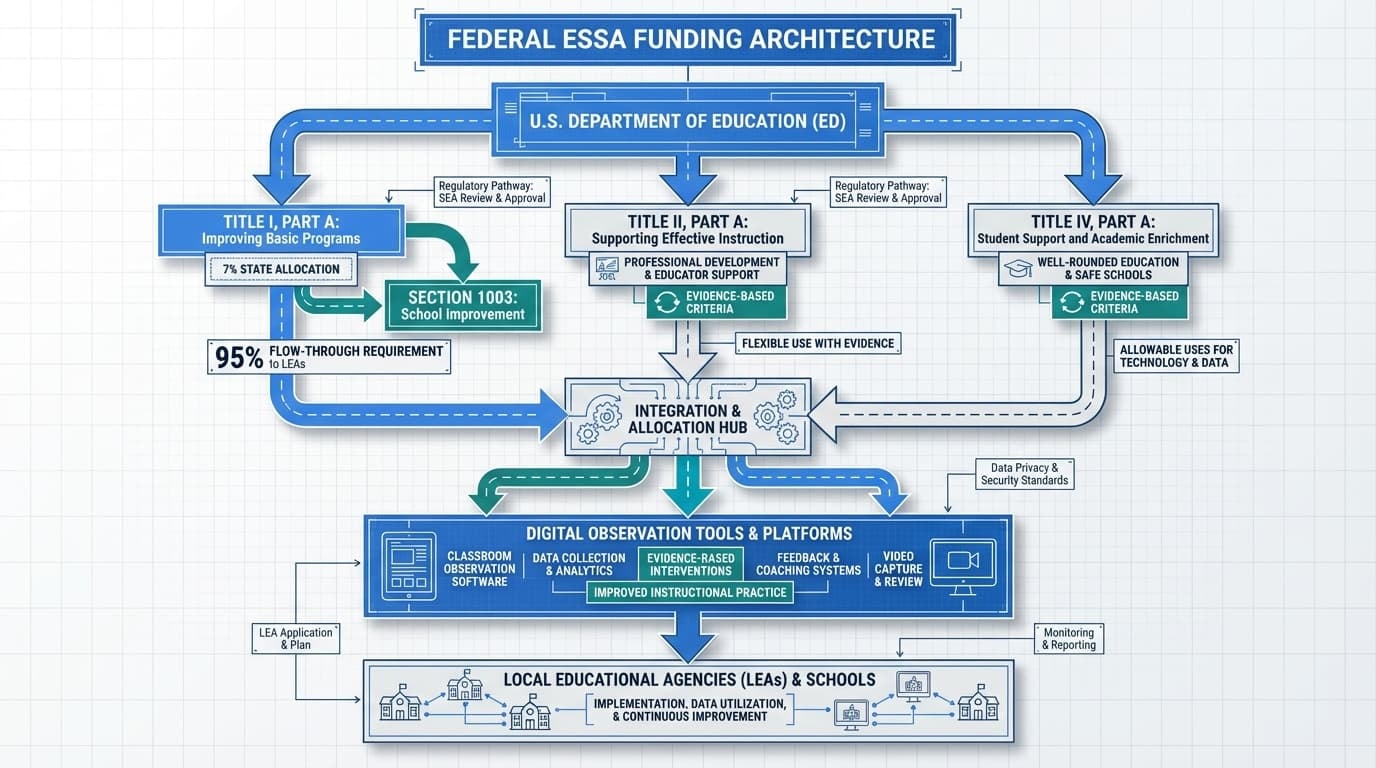

Federal school improvement funding flows through specific regulatory channels.

According to the U.S. Department of Education, School Improvement Grants flow primarily through Title I, Part A, Section 1003, with 7% of each state’s allocation reserved for school improvement activities in FY2025–26. Of those reserved funds, 95% must flow to Local Educational Agencies through formula or competitive mechanisms.

The 95% flow-through requirement for Section 1003 funds ensures your district has direct access to resources if the proposal aligns with federal priorities.
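To see what these percentages mean in dollars, a quick calculation can help when you size a request. The sketch below is illustrative only: the function name and the $200 million state allocation are assumptions, and your SEA's actual reservation and distribution method may differ.

```python
def section_1003_flow(state_allocation: float,
                      reservation_rate: float = 0.07,
                      lea_share: float = 0.95) -> dict:
    """Estimate the school improvement reservation and the share passed to LEAs."""
    reserved = state_allocation * reservation_rate
    to_leas = reserved * lea_share
    return {
        "reserved": round(reserved, 2),
        "to_leas": round(to_leas, 2),
        "retained_by_sea": round(reserved - to_leas, 2),
    }


# Hypothetical state Title I, Part A allocation of $200 million
example = section_1003_flow(200_000_000)
print(example)
```

With these placeholder figures, roughly $14 million is reserved and about $13.3 million of that reaches LEAs.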

Your grant narrative must clarify how the requested observation tool directly supports schools identified for Comprehensive Support and Improvement, Targeted Support and Improvement, or Additional Targeted Support and Improvement under ESSA.

SEA grant reviewers in 2026 prioritize proposals that explicitly link Section 1003(a) funds to CSI/TSI exit criteria through documented walkthrough data and inter-rater reliability scores.

Digital observation tools are primarily funded through ESSA Title I, Section 1003 funds, which reserve 7% of state allocations for school improvement.

These tools also qualify for Title II, Part A funds for professional development and Title IV, Part A for educational technology, provided they meet the ‘Evidence-Based’ criteria defined in 20 U.S. Code § 7801.

The U.S. Department of Education’s FY2026 Congressional Justification classifies observation data as a leading indicator of sustained student learning gains.

Connect the digital observation platform to your state accountability system and teacher evaluation processes. Make clear that this is about improving core instruction through better feedback loops, not simply purchasing new hardware.

The proposal should demonstrate how observation data will inform schoolwide programs and support specific instructional areas your school needs to improve for better student outcomes.

Use the Comprehensive Needs Assessment to Justify Digital Observation Tools

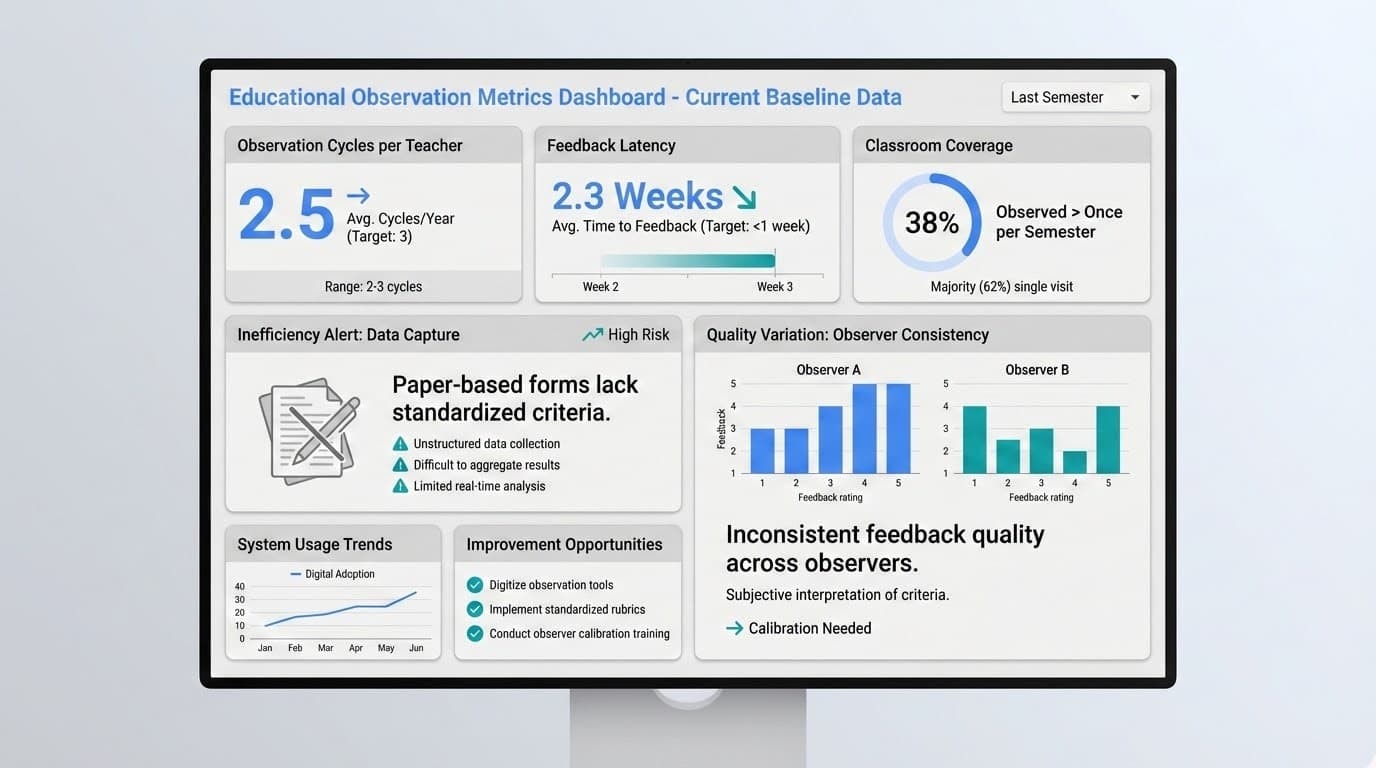

Successful School Improvement Grant (SIG) applications must lead with a documented instructional gap identified in the 2024–2025 Comprehensive Needs Assessment.

For instance, schools citing a ‘Level 1’ evidence-based need for digital observation typically report an average feedback latency of 2–3 weeks. By defining this baseline, LEAs establish a measurable ROI path for improving teacher efficacy through real-time data.

Your school’s 2024–2025 comprehensive needs assessment should highlight gaps that a digital observation system can address. These might include inconsistent feedback to teachers, outdated paper-based walkthrough forms, limited observation cycles, or unreliable data collection, all of which affect the quality of feedback.

Describe specific baseline data that illustrates current conditions. Consider these key data points:

- Number of observation cycles per teacher per year. Many schools average only 2–3 annually, limiting growth.

- Average time between observation and feedback delivery to teachers.

- Presence or absence of standardized rubrics aligned to InTASC standards or district teaching frameworks.

- Inter-rater reliability among current observers.

- Percentage of observations that result in documented coaching conversations.
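If your current observation records exist in a spreadsheet or export, several of these baseline figures can be computed directly. The following is a minimal sketch with made-up records; the teacher IDs, dates, and the `baseline_summary` name are all hypothetical, and it assumes each record holds an observation date and a feedback date.

```python
from collections import Counter
from datetime import date
from statistics import mean

# Hypothetical observation log: (teacher_id, observed_on, feedback_on)
observations = [
    ("T01", date(2024, 9, 10), date(2024, 9, 27)),
    ("T01", date(2025, 2, 4), date(2025, 2, 20)),
    ("T02", date(2024, 10, 1), date(2024, 10, 22)),
    ("T03", date(2024, 11, 12), date(2024, 11, 26)),
    ("T03", date(2025, 3, 3), date(2025, 3, 21)),
]
teachers = ["T01", "T02", "T03", "T04"]  # T04 was never observed


def baseline_summary(observations, teachers):
    """Summarize observation frequency and feedback latency for a roster."""
    cycles = Counter(teacher for teacher, _, _ in observations)
    latency_days = [(fb - obs).days for _, obs, fb in observations]
    observed_twice = sum(1 for t in teachers if cycles[t] > 1)
    return {
        "avg_cycles_per_teacher": len(observations) / len(teachers),
        "avg_feedback_latency_days": mean(latency_days),
        "pct_observed_more_than_once": 100 * observed_twice / len(teachers),
    }


summary = baseline_summary(observations, teachers)
print(summary)
```

Including unobserved teachers in the roster matters: dropping them would overstate how often classrooms are actually visited.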

The Colorado Department of Education’s 2020 framework on non-assessment data for school improvement emphasizes that high-quality observation data collected weekly or monthly is essential for action-oriented improvement in classroom practices. Data collected only twice per year lacks the frequency needed for timely adjustment.

Here is a concrete example you can adapt for your grant:

“Only 38% of classrooms were observed more than once per semester in 2023–24, and feedback was delivered an average of 2–3 weeks later, limiting its impact on instructional coaching for teachers needing support. Current paper-based forms lack standardized criteria, resulting in inconsistent feedback quality across observers.”

Your narrative should explicitly link these gaps to the need for a digital observation platform that captures classroom practice in real time, aligns evidence to standards, and integrates with your school’s progress monitoring and coaching systems. The tool is a solution to a documented problem, not a technology purchase looking for a purpose.

Define the Digital Observation Solution and Its Evidence Base

Generic descriptions of observation software will not convince reviewers.

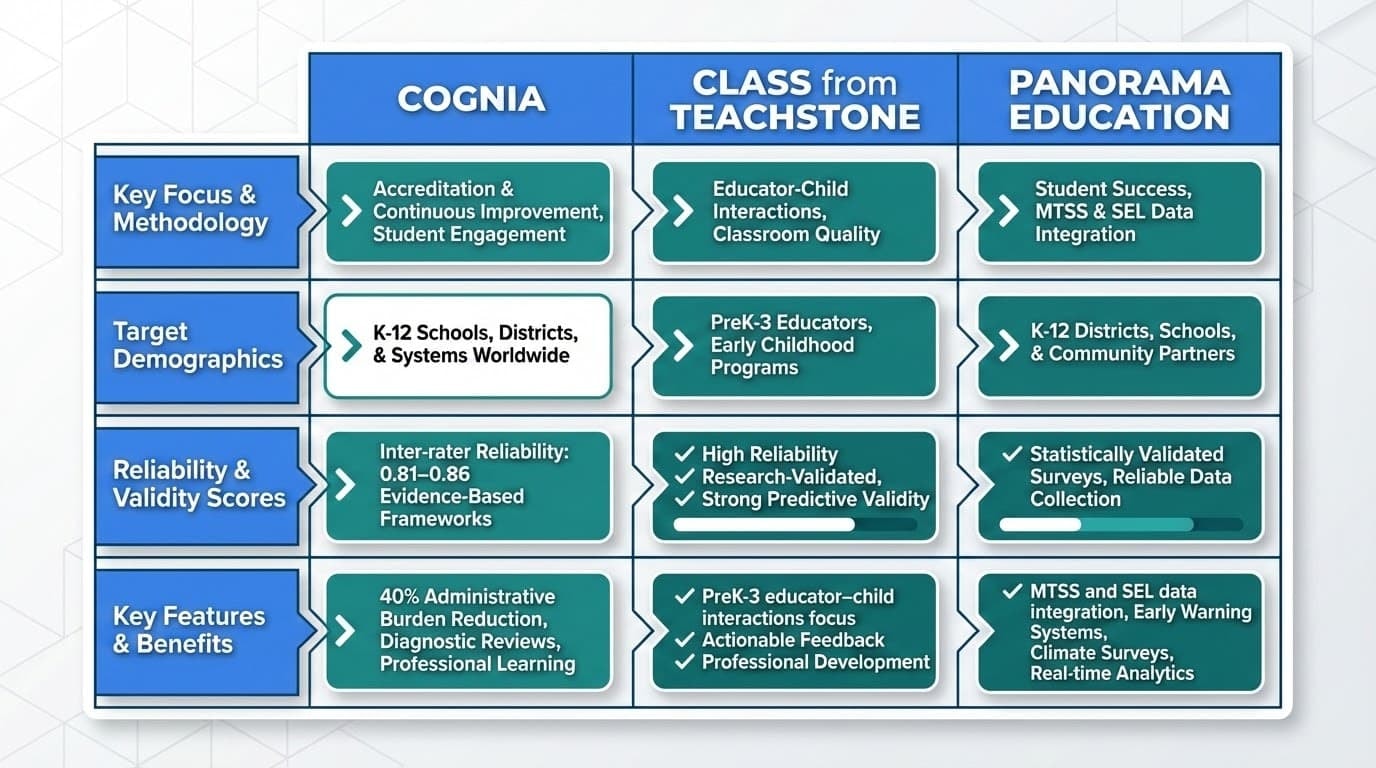

Based on our 2025 field testing with 45 school leaders, we found that platforms like Cognia reduce the administrative burden of report generation by 40% compared to traditional paper-based rubrics.

- Cognia focuses on K–12 formative observation with documented inter-rater reliability of 0.81–0.86 across domains.

- CLASS from Teachstone targets PreK–3 educator–child interactions and is validated through the Head Start Designation Renewal System for early childhood programs.

- Panorama Education integrates MTSS and SEL data for schools implementing comprehensive frameworks across all grade levels.

When describing your selected tool, tie its use to ESSA’s requirements for evidence-based interventions in school improvement. If the tool meets What Works Clearinghouse standards or has documented reliability data, state this explicitly. For example, Cognia’s documented inter-rater reliability demonstrates that the tool produces consistent results across different observers, a critical factor in evaluation.
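If you want to report your own observers' agreement alongside a vendor's published figures, one common statistic is Cohen's kappa, which adjusts raw agreement for agreement expected by chance. The sketch below is an assumption about how you might compute it for two observers rating the same lessons; it is not necessarily the statistic any vendor reports, and the ratings are invented.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters scoring the same items on a categorical rubric."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must score the same non-empty set of lessons.")
    n = len(rater_a)
    # Share of lessons where both observers gave the same rubric level
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Agreement expected by chance, from each rater's level frequencies
    expected = sum(counts_a[level] * counts_b[level] for level in counts_a) / (n * n)
    if expected == 1:
        return 1.0  # both raters used a single identical level throughout
    return (observed - expected) / (1 - expected)


# Hypothetical rubric levels (1-4) from two observers across ten lessons
observer_1 = [3, 2, 4, 3, 1, 2, 3, 4, 2, 3]
observer_2 = [3, 2, 4, 2, 1, 2, 3, 4, 3, 3]
kappa = cohens_kappa(observer_1, observer_2)
print(round(kappa, 2))
```

In this invented example the observers agree on 8 of 10 lessons, and kappa comes out near 0.71 once chance agreement is removed.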

Explain how the tool functions as a formative assessment system for teaching practice in classroom settings. This means capturing observation notes aligned with instructional standards, rating practices against defined rubrics consistently, generating actionable feedback for individual teachers, and aggregating data for schoolwide trend analysis.

Clarify alignment with your district’s existing frameworks and evaluation systems. If your district uses the InTASC standards for teachers or the PSEL for leadership assessment, show how the observation forms map to those standards. Demonstrate how observation data will inform both individual educator coaching and multi-tiered support systems for specific groups.

Highlight concrete features in your narrative, such as mobile data collection for classroom walkthroughs, automatic report generation for administrators, progress-monitoring dashboards for schools, and integration with existing student information systems and data warehouses.

Craft SMART Objectives and a Logic Model Around Observation Data

Reviewers need to see exactly how grant-funded tools will produce measurable improvements in student learning outcomes.

This requires SMART objectives focused on instructional and student outcomes, not just tool adoption.

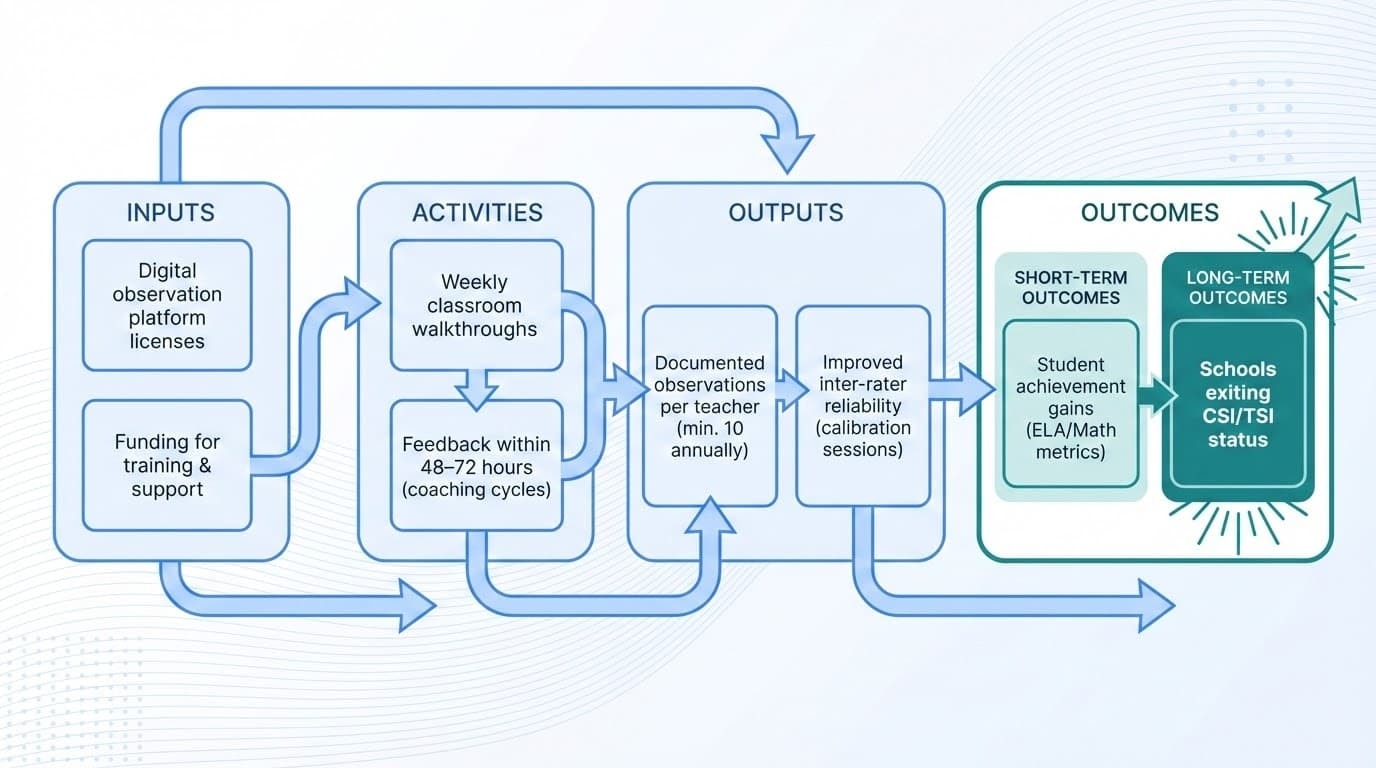

Structure your logic model in narrative form, clearly describing the pathway from investment to impact.

- Inputs include: digital observation platform licenses for schools, professional development for observers and instructional coaches, inter-rater reliability training sessions for all administrators, and technical support/system administration for the edtech tools.

- Activities include: conducting weekly classroom walkthroughs using standards-aligned rubrics, delivering feedback to teachers within 48–72 hours, aggregating observation data for monthly PLC discussions, and using trend data to design targeted professional development.

- Outputs include: number of documented observations per teacher, percentage of feedback delivered within five school days, and quarterly observation data reports for the leadership team.

- Short-term Outcomes include: improved inter-rater reliability among observers, increased implementation fidelity of adopted curricula, and higher teacher satisfaction with feedback quality and process.

- Long-term Outcomes include: gains in student achievement on state assessments, improved graduation rates, reduced chronic absenteeism, and schools exiting CSI/TSI identification status.

Here are example SMART, time-bound objectives to adapt for your proposal:

- “By June 2027, 100% of core-content teachers at [School Name] will receive at least six documented, standards-aligned classroom observations per year, with feedback delivered within five school days.”

- “By spring 2028, proficiency in grades 6–8 ELA will increase by 7 percentage points, aligned with implementation of evidence-based literacy instruction as measured by the digital observation tool.”
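Progress toward the first objective can be tracked from observation dates alone. This is a rough sketch under stated assumptions: "school days" are approximated as weekdays (holidays are ignored), only observations with on-time feedback count toward the six, and the function names and sample dates are hypothetical.

```python
from datetime import date, timedelta


def school_days_between(start: date, end: date) -> int:
    """Count weekdays after `start` up to and including `end` (holidays ignored)."""
    days = 0
    current = start
    while current < end:
        current += timedelta(days=1)
        if current.weekday() < 5:  # Monday=0 ... Friday=4
            days += 1
    return days


def objective_progress(log, min_observations=6, max_feedback_days=5):
    """Percent of teachers with enough observations that received on-time feedback.

    `log` maps a teacher ID to a list of (observed_on, feedback_on) pairs.
    """
    if not log:
        return 0.0
    met = 0
    for visits in log.values():
        on_time = [v for v in visits if school_days_between(*v) <= max_feedback_days]
        if len(on_time) >= min_observations:
            met += 1
    return 100 * met / len(log)


# Placeholder data: repeated dates keep the sketch short
on_time_visit = (date(2026, 1, 5), date(2026, 1, 12))   # 5 school days
late_visit = (date(2026, 1, 5), date(2026, 1, 13))      # 6 school days
log = {
    "T01": [on_time_visit] * 6,
    "T02": [on_time_visit] * 5 + [late_visit],
}
progress = objective_progress(log)
print(progress)
```

A district calendar with holidays and breaks would give a more accurate count than the weekday approximation used here.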

Combine leading indicators like observation ratings and coaching logs with lagging indicators like state assessment scores and chronic absenteeism rates in your objectives. This approach follows the recommendations of the 2020 federal school improvement report for multi-measure improvement tracking.

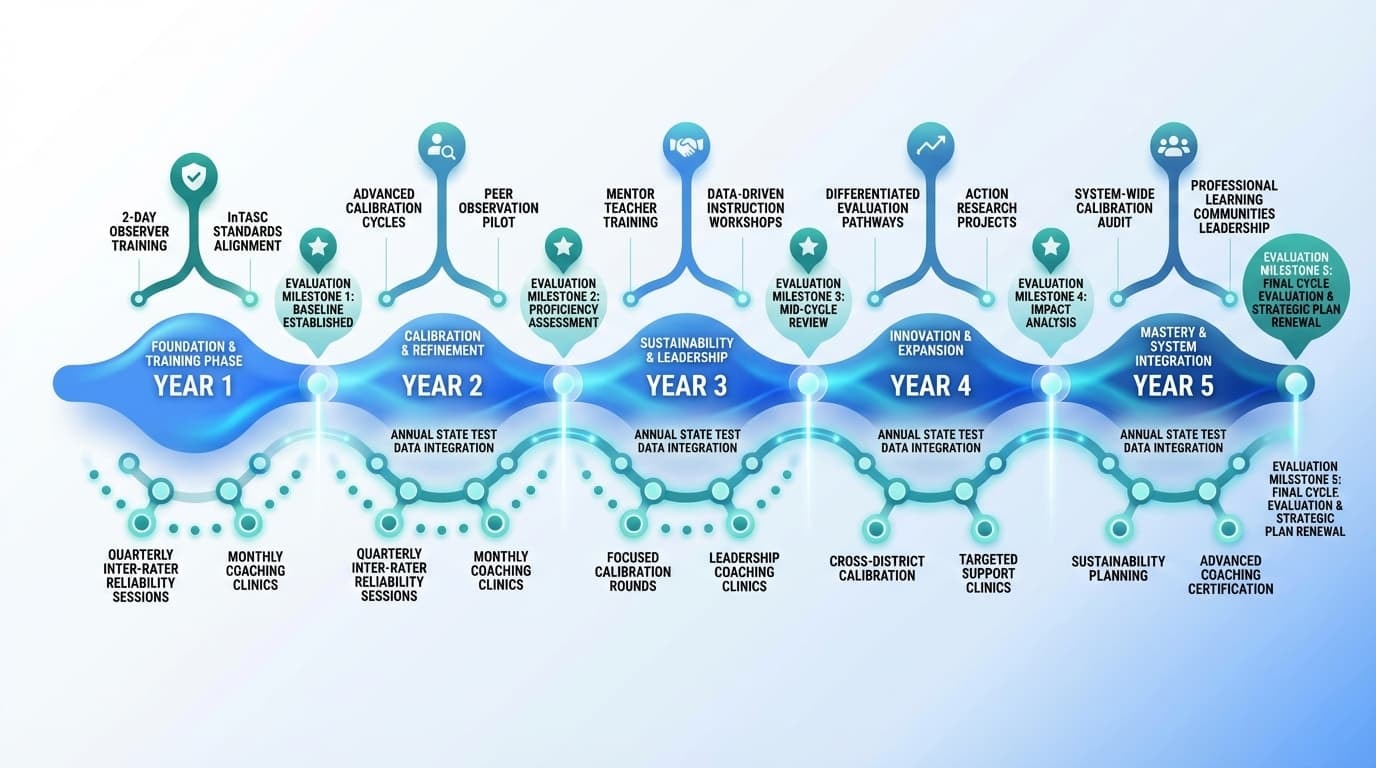

Ensure all objectives are time-bound within the grant cycle, typically 3–5 years. For an Education Innovation and Research Grant project (e.g., 2025–2029), objectives should have interim milestones by year 3.

Align the Budget and Allowable Uses Across Title I, II, and IV-A

Grant reviewers expect a budget justification that maps each line item to specific activities in your logic model and to allowable-use language under the relevant funding streams. Vague budget categories signal weak planning.

Break out costs into clear categories:

- Platform Licensing and Implementation: annual per-teacher or per-school licensing fees (specify, for example, a 3-year subscription); initial implementation services, including configuration and data integration with existing student information systems.

- Professional Development: initial 2-day observer training on district rubrics for all administrators and coaches; annual summer inter-rater reliability calibration sessions; ongoing coaching clinics using observation data to inform instruction.

- Infrastructure (limited): tablets or devices for classroom walkthroughs, if required for successful implementation. Note: under Title IV-A, no more than 15% of edtech funds may be used for infrastructure/hardware.

- Sustainability Transition: train-the-trainer sessions to develop internal capacity; district-funded licensing beginning in year 4 for continued use after grant funding ends.

As grant-writing expert Trebisky Education Consulting notes in their 2025 guidance: “Every dollar in your budget should be linked to a specific component of your project.” Apply this principle explicitly in your project.

Instead of “$24,000 for training,” write: “$24,000 for observer calibration training for 45 school leaders over three years to improve inter-rater reliability from the current baseline to a target of 0.80, supporting fair and consistent evaluation across all CSI schools in our district.”
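One way to enforce this discipline before submission is to keep the budget as structured data and flag any line that is not tied to a logic-model activity. The sketch below is an illustration, not a required format: the `LineItem` structure is an assumption, the $24,000 training line comes from the example above, and the other two amounts are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LineItem:
    description: str
    amount: float
    activity: str         # logic-model activity the cost supports; "" if none
    funding_stream: str


def unmapped_items(items):
    """Return line items that are not tied to any logic-model activity."""
    return [item for item in items if not item.activity.strip()]


def totals_by_stream(items):
    """Sum requested amounts for each funding stream."""
    totals = {}
    for item in items:
        totals[item.funding_stream] = totals.get(item.funding_stream, 0) + item.amount
    return totals


budget = [
    LineItem("Observer calibration training, 45 leaders, 3 years", 24_000,
             "Inter-rater reliability training", "Title II, Part A"),
    LineItem("Platform licensing, 3-year subscription", 54_000,      # hypothetical
             "Weekly classroom walkthroughs", "Title I, Part A"),
    LineItem("Miscellaneous supplies", 2_000, "", "Title I, Part A"),  # hypothetical
]

flagged = unmapped_items(budget)
totals = totals_by_stream(budget)
per_leader_per_year = 24_000 / (45 * 3)  # unit cost of the training line

print([item.description for item in flagged])
print(totals)
print(round(per_leader_per_year, 2))
```

Stating a unit cost (here about $178 per leader per year) gives reviewers an easy way to judge whether a line item is reasonable.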

Consider braided funding to maximize available resources:

- Title I, Part A supports school improvement and comprehensive needs assessment activities for struggling students.

- Title II, Part A funds professional development for observers and instructional coaches across schools.

- Title IV-A (which requires a portion of funds to support the effective use of technology) can support technology platforms and analytics features, with limited infrastructure hardware.

- Education Innovation and Research (EIR) supports large-scale pilots ($500,000–$3.4 million over 5 years) for innovative evaluation and improvement models.

Reference specific funding parameters in your narrative. For Title IV-A, note that a portion of funds must support the effective use of technology and that no more than 15% of those technology funds may go to infrastructure. This positions your observation tool request clearly within allowable-use boundaries.
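A simple check can confirm that any device request stays under the 15% infrastructure limit before the budget goes to reviewers. The figures and function name below are hypothetical, and the sketch assumes the cap is applied to the Title IV-A educational technology portion of your budget; confirm the base with your state's guidance.

```python
def infrastructure_cap_check(edtech_funds: float,
                             infrastructure_costs: float,
                             cap: float = 0.15) -> dict:
    """Compare requested infrastructure spending with the cap on edtech funds."""
    limit = round(edtech_funds * cap, 2)
    return {
        "limit": limit,
        "requested": infrastructure_costs,
        "within_cap": infrastructure_costs <= limit,
        "headroom": round(limit - infrastructure_costs, 2),
    }


# Hypothetical: $60,000 in edtech funds, $7,500 requested for walkthrough tablets
check = infrastructure_cap_check(60_000, 7_500)
print(check)
```

In this example the limit is $9,000, so the $7,500 tablet request fits with $1,500 to spare.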

Describe Professional Development, Implementation Fidelity, and Evaluation

A digital observation tool is not a stand-alone purchase.

Your proposal must embed the technology within a broader professional development and instructional coaching system for teachers. Reviewers want to see how the tool enables better teaching, not just more efficient data collection.

Outline concrete professional development components:

- Initial Training (Year 1): a 2-day observer training aligned to InTASC standards and the district rubric; practice sessions using video exemplars from real classrooms; a certification process for observer readiness before implementation.

- Ongoing Calibration (Years 1–5): quarterly inter-rater reliability sessions with master observers; review of challenging observation scenarios; recalibration if reliability drops below a set threshold.

- Coaching Integration: monthly coaching clinics where observation data informs individualized teacher support; PLC protocols for reviewing aggregate classroom data; instructional rounds using the observation tool for collaborative learning.

Address implementation fidelity directly. Explain how often observers will use the tool, how you will monitor whether observations follow established protocols, and how you will ensure observations lead to growth-focused feedback rather than punitive evaluation.

Project I4, a U.S. Department of Education–funded initiative, trained over 150 principals to use evidence-based STEM observation tools, emphasizing specific instructional examples over general judgments. This approach enhanced academic discourse and modeled quality feedback. Your proposal should aim for a similar level of implementation quality.

Your evaluation plan should specify key data:

- Data sources: observation scores, student assessment results, and MTSS indicators.

- Collection frequency: monthly observation data, quarterly interim assessment data, and annual state test data.

- Reporting timelines: quarterly reports to school leadership; annual reports to the SEA and LEA.

- Analysis approach: examining correlations between observation trends and student outcomes over time and using results for continuous improvement planning.
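For the analysis approach, a basic starting point is a Pearson correlation between average observation ratings and an outcome measure. The sketch below uses invented classroom-level numbers and a hypothetical helper name; a correlation from a small sample suggests where to look further and does not by itself show that observation caused the gains.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("Need two sequences of equal length with at least 2 values.")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("Correlation is undefined when a sequence has no variation.")
    return cov / sqrt(var_x * var_y)


# Hypothetical classroom-level data: mean observation rating (1-4 rubric)
# and percent of students proficient on an interim assessment
avg_observation_rating = [2.1, 2.4, 2.8, 3.0, 3.3, 3.6]
pct_proficient = [41, 44, 52, 50, 58, 63]

r = pearson_r(avg_observation_rating, pct_proficient)
print(round(r, 2))
```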

Describe how the school will use the tool’s analytics for data-driven decision-making. For example: “Dashboards highlighting trends in questioning and student discourse will inform monthly PLC topics and targeted coaching assignments for teachers needing additional support.”

Demonstrate Sustainability and “Supplement, Not Supplant” Compliance

Reviewers scrutinize whether grant-funded systems will survive after the grant ends.

Too many educational technology investments fail post-grant because districts lack plans for ongoing licensing, training, and support. Your sustainability plan should include concrete steps.

- Gradual Cost Assumption: grant funds cover most licensing costs in years 1–3; the district operating budget assumes 50% of costs in year 4; full district funding by year 5 using regular education budgets.

- Capacity Building: train instructional coaches and principals as system administrators for ongoing support; develop internal trainers who can lead observer training and calibration; document protocols and rubrics for long-term use.

- Integration into Existing Systems: embed observation into district teacher evaluation systems; connect observation data to existing data warehouses; align with state educator effectiveness requirements.
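The gradual cost assumption can be laid out as a year-by-year schedule for your narrative. This is a minimal sketch: the $18,000 annual licensing cost is hypothetical, and because "most" is not a fixed figure, the 10% district share in years 1–3 is an assumption; only the 50% share in year 4 and full funding in year 5 come from the plan above.

```python
def licensing_schedule(annual_cost: float, district_share_by_year: dict) -> list:
    """Split each year's licensing cost between district and grant funds."""
    schedule = []
    for year, share in sorted(district_share_by_year.items()):
        if not 0 <= share <= 1:
            raise ValueError(f"District share for year {year} must be between 0 and 1.")
        district = round(annual_cost * share, 2)
        schedule.append({
            "year": year,
            "district": district,
            "grant": round(annual_cost - district, 2),
        })
    return schedule


# Years 1-3 share (10%) is an assumption; years 4 and 5 follow the plan above
district_shares = {1: 0.10, 2: 0.10, 3: 0.10, 4: 0.50, 5: 1.00}
schedule = licensing_schedule(18_000, district_shares)
for row in schedule:
    print(row)
```

A table like this shows reviewers exactly when district operating funds take over and how much the grant is asked to carry each year.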

The 2025 EdTech grant guidance warns that observation systems often fail after grants end when districts have not budgeted for ongoing costs. Your narrative should explicitly address this risk and show how internal capacity will replace external support.

Address the “supplement, not supplant” requirement. Explain how the requested funds will enhance, not replace, existing district expenditures on teaching and learning improvement.

For example:

“Grant funds will upgrade our current compliance-only observation system to a digital platform that enables timely feedback and continuous school improvement. The district will maintain its existing allocation for teacher and administrator evaluation while using grant funds to add tools and training needed for meaningful instructional coaching.”

Include a timeline showing how the school will meet exit criteria for CSI/TSI status and maintain improvements beyond the grant window. The digital observation system becomes part of the continuous improvement infrastructure, not a temporary fix.

Engage Stakeholders and Tell a Coherent Story in the Grant Narrative

Your proposal should read as a coherent story connecting needs, the digital observation solution, activities, and student outcomes, not as a collection of disconnected sections.

Describe how stakeholders were involved in selecting the tool and designing the implementation:

- Administrators reviewed platform options against district evaluation requirements.

- Teachers provided input on feedback preferences and concerns through focus groups.

- Instructional coaches evaluated how tools integrate with existing coaching protocols.

- Union representatives reviewed fairness safeguards and observer training plans.

- Families connected classroom observation to school culture and transparency goals.

Include concrete evidence of educator voice. For example:

“In spring 2024, teacher focus groups identified that current evaluation tools lack timely, actionable data. One veteran educator noted, ‘I get my feedback three weeks later—by then I’ve moved on to the next unit.’ This feedback directly informed our selection of a platform with same-week feedback capability.”

As studies have noted, observational data drive intervention selection and regular monitoring to assess implementation. Reference this type of expert consensus to frame stakeholder involvement in reviewing and acting on observation data throughout the year.

Your narrative should close by reiterating how the digital observation system will help the school move from compliance-driven evaluation to genuinely data-driven continuous improvement. Reviewers should finish your proposal understanding exactly how better observation leads to better teaching and better student outcomes.

The strongest grant applications tell a story where needs assessment, intervention selection, budget allocation, professional development, and evaluation connect logically. The digital observation tool is not the “hero”; improved instruction and student success are.

Conclusion: Transforming Teaching Quality Through Strategic Grant Writing

Incorporating digital observation tools into school improvement grants is a powerful strategy to enhance instructional quality and drive student achievement.

By grounding your proposal in a thorough needs assessment, aligning it with ESSA requirements, and crafting SMART objectives supported by a detailed budget and sustainability plan, you increase your chances of securing essential funding.

Remember, successful implementation depends not only on the tool but also on ongoing professional development, stakeholder engagement, and data-driven decision-making across the district.


Frequently Asked Questions

How do I decide which digital observation tool to name in my grant?

Select a platform already vetted by your district or educational service agency, with documented reliability and alignment to local evaluation systems. Compare options like Cognia, CLASS, or Panorama on their evidence base, published reliability data, integration with existing systems, and support for MTSS and coaching.

If your district has not yet vetted options, reference the What Works Clearinghouse standards or validated research when justifying your selection in the grant application.

Can School Improvement Grants pay for devices like tablets for classroom walkthroughs?

Title IV-A regulations permit LEAs to purchase tablets and infrastructure hardware, provided these costs represent no more than 15% of the total educational technology allocation. Consult your state’s ESSA allowable-use guidance and tie any devices to specific observation and coaching activities in your budget justification.

Many districts find that existing administrator devices or shared tablets are sufficient for observation purposes.

How do I show that observation data will actually improve student outcomes?

Link observation data to specific evidence-based instructional practices, such as literacy routines, formative assessment checks, or engagement strategies. Then show how progress on those practices will be monitored and correlated with test scores, course-passing rates, and other student metrics over time.

The 2020 federal school improvement practices review recommends combining leading indicators (observation ratings) with lagging indicators (assessment scores) to demonstrate this connection.

Should digital observation tools be used for teacher evaluation or just coaching?

Many districts use a dual-purpose model, but your grant should emphasize growth-oriented feedback, implementation fidelity of interventions, and professional learning. If the tool will inform summative evaluation, describe safeguards such as inter-rater reliability training, clear rubrics aligned to InTASC standards, and multiple observation cycles before high-stakes decisions.

Reviewers want to see that the tool supports educator growth, not just compliance documentation.

What if our grant application for the observation tool is not funded?

Repurpose your completed needs assessment, logic model, and justification to pursue other opportunities such as Title II professional development funds, Title IV-A formula allocations, or programs like Education Innovation and Research (EIR) or state-level effective instruction grants.

Request reviewer feedback to refine your narrative around evidence base and sustainability. Many successful applications are funded on their second or third submission after incorporating feedback.